The State of AI and Autonomy in Unmanned Ground Vehicles (UGV)

Part 2 of The AI & Autonomy Series

On March 12th, 2024, Ukraine’s Minister of Digital Transformation announced that Ukraine would shift to mass-production of robots in order to “minimize human involvement on the battlefield”. This strong market signal in “response to the numerical advantage of the enemy” led to a Cambrian Explosion in unmanned ground vehicles.

In December of 2024, the Khartiia Brigade of the National Guard of Ukraine reported an attack by the Ukrainian defense forces on Russian positions near the village of Lyptsi, north of Kharkiv. The units involved used dozens of unmanned ground vehicles in conjunction with aerial reconnaissance, bomber and strike drones. What made this successful operation stand out, however, was not the (arguably impressive) presence of so many unmanned vehicles, but what was absent, namely human soldiers.

In July 2025, the Ukrainian Third Assault Brigade used a combination of unmanned ground vehicles (UGVs) and drones to attack a Russian position. After UGVs and strike drones destroyed enemy bunkers, and a UGV carrying an anti-tank mine threatened the remaining soldiers, they surrendered by writing a message onto a piece of cardboard and placing it outside of their trench. An overhead drone then guided the soldiers to Ukrainian lines, where they were taken prisoner without any Ukrainian infantry being exposed to fire.

The first-ever recorded capture of prisoners using only unmanned vehicles. Video: United24/Third Assault Brigade

Will unmanned ground vehicles replace soldiers on the battlefield? How hard is it to navigate the ground? How precisely can a gun kill autonomously? What are the challenges unique to autonomous movement on the ground of the battlefield? How far has autonomy developed, and how widely is it used today? What about Israel, China, the US, and Russia? Why do we hear so little about UGVs and autonomy from Russia? How does ground autonomy work across different mission profiles? What are the missing pieces for true ground autonomy? Which companies and countries are best positioned to close the gap, and why? What can the others do to catch up?

Let’s talk about autonomy in unmanned systems. Welcome to The AI & Autonomy Series, Part Two: Unmanned Ground Vehicles (UGV).

Robot Wars? These are of course remote-operated. Clip: Robot Wars Classics

What You Will Learn In This Deep Dive

Outline

Why We’re Here (and some predictions to start)

Pros and Cons of UGVs on the Battlefield

How Does Autonomous Driving Work (on the battlefield)?

The Grounding Challenges

Technologies & Techniques

The Chaos of the Battlefield

Autonomy Levels Revisited in Brief

Challenges and History of UGV Autonomy

Spotlight: Russia

Spotlight: China

Excursion: (Chinese) Legged Autonomy

Israel

UGV Autonomy by Mission Profile/Use Case

Logistics

Combat

ISR, EW & EOD (including mine-laying)

Conclusion

Recommendations

Ministries of Defense

Industry

Investors

Wrap-up & Outlook

Have you read Part One of the Autonomy Series? It contains valuable primers on the software, sensors, processing, data and machine learning for unmanned systems autonomy.

See the history section to find out what this is.

Outline

In order to define the state of the art of UGV autonomy, we will dissect the pros and cons of unmanned ground systems, the challenges inherent to autonomy on the ground, the history of its development and today’s capabilities of some of the leading nations. Along the way, we will make grounded assumptions as to where some of the roadblocks lie, but also as to where some nations might be able to lead, despite not having proven themselves on a battlefield. Then, we will look into the future of the technology, and lastly ask ourselves what Ministries of Defense, industry and investors should and shouldn’t do if they want to be ready for the next conflict and maximize ROI.

Guess the year this photo was taken. More on this in the history section.

“Quantity has a quality of its own.” - A page by page render of the Deep Dive you’re reading right now

Hello. Thank you for supporting my work. I provide technical knowledge and market intelligence about unmanned systems and AI for governments, OEMs and investors. If you’ve been following me on LinkedIn, you know about my passion for research and writing. This is the next chapter.

I write comprehensive deep dives (this one has more than 12,000 words) full of primary research, intelligence and experience I gather myself, from the offices of OEMs and startups to the forward edge of innovation in Ukraine.

They’re being read by MoDs, OEMs and SMEs, as well as their investors in PE, VC and banking. The TECH WARS Deep Dives take roughly a month to research and write (zero AI), are published bi-weekly, and are supported by my subscribers. Go ahead and give it a try - you can cancel risk-free anytime!

Why We’re Here (and some predictions to start)

Unmanned ground vehicles, while having a long history, don’t have a long history of successful implementation on any battlefield. Even some of the nations that are arguably masters at robotics and have seen active conflict engagements, like Israel and the United States, do not yet use ground robots widely. The question is why, and why that might be changing at a pace that rivals that of the sudden proliferation of unmanned aerial vehicles (UAV/UAS). I’d argue the following:

UGVs are clunky in more senses than one - bear with me here.

UGV autonomy is both hard and necessary.

So far, conflict engagements have not mirrored those in Ukraine.

I won’t spoil the above points by explaining them right at the start and rob you of the (hopefully) pleasurable experience of discovering the insights while reading this article. What I’ll say, however, is this: I expect UGVs to see similar success in production and deployment as UAVs, albeit not in the same fashion or for the same set of mission profiles. I am also certain that we are only now seeing the first meaningful global market development, meaning we’re at the very beginning of the J-curve. I also believe that AI and autonomy will play major roles in the future success of these platforms, but that some of the major winners of this race might not be found in Ukraine.

Robot Wars? The state of UGV motion over obstacles. Video taken by the author in Ukraine

Pros and Cons of UGVs

Before we move on, let’s start with some general observations on the pros and cons of unmanned ground vehicles:

Platform disadvantages

Trust and cohesion being issues for adoption

Breaking cover

Navigation errors

Shorter comms distances

Higher dangers for operators

Noise

Hence why direct support roles alongside frontline infantry are still lagging in development, and why many of the more sophisticated UGVs have hybrid motors

UAVs as the alternative for many mission profiles

Platform advantages

Very long loitering time

High payload

Easier to add armor

Higher range

Good integration into ground units (vs. larger drones)

Question marks? Unclear as to why I have listed some of those pros and cons? Unclear for which mission profiles UAVs are almost always the better choice? I’ll explain all of those in the article. But first, we need to talk about ground autonomy, and what makes it so special (and widely different from aerial autonomy).

One More Thing

Before we start, a little housekeeping as always. I’ll keep it ultra-short this time: THANK YOU. I am still overwhelmed by your continued, and significantly increased, support. I hear your feedback: this Deep Dive will be a bit more compact than the last one (-3,000 words), while trying to maintain the same information density and depth you are used to.

I want to make this a community-driven publication. This means you get to vote on what I write about. Founding Members also get to hang out with me in a monthly Q&A video call. I’m not writing these for myself, but for you, and I’m always open to suggestions, so feel free to send me a message anytime.

With my thanks to each and every one of you one more time, let’s get to it.

How Does Autonomous Driving Work (on the battlefield)?

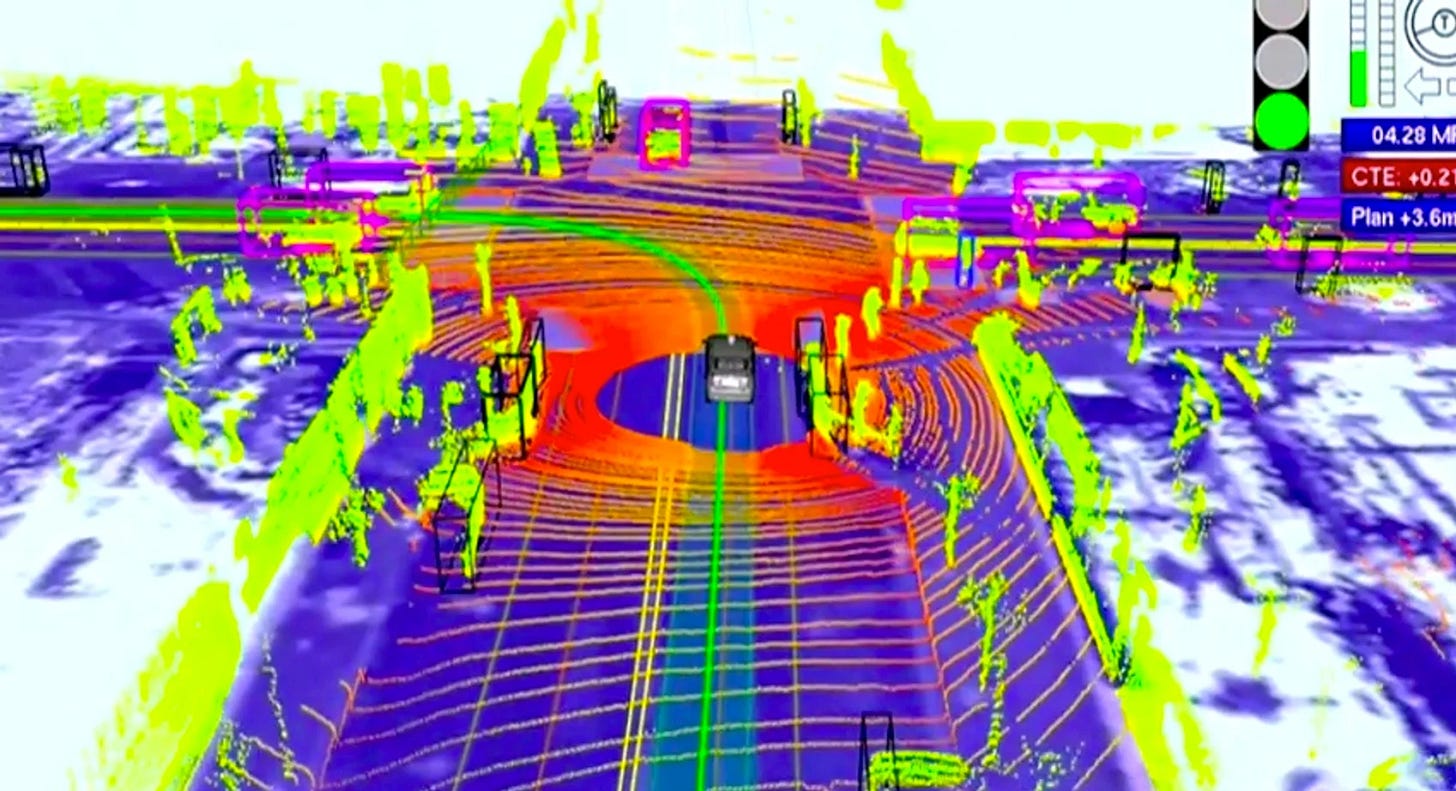

Visualization: How Google’s Waymo cars see the world with Lidar. Image: IEEE Spectrum/Google

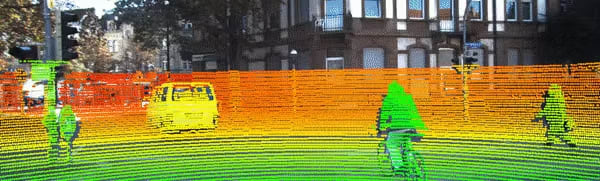

The ground is hard: what looks like a video game is actually Ukrainian UAVs wreaking havoc on a group of Russian volunteer-built combat UGVs. Photo: xronikabpla Telegram Channel

One final note: it makes sense to revisit Part One of the Autonomy Series if you’re unfamiliar with the basics of sensor fusion, computer vision, machine learning for mission planning and movement, and the processing requirements.

The Grounding Challenges

Take a look at the image underneath the headline of this section. The Waymo car whose sensing of its surroundings is being visualized there is using Lidar on top of other sensors (vision, orientation, compass, GNSS, speed, proximity...) to “see” and classify the world. Now take a look at the second picture. Multiple challenges should immediately come to mind:

The environment (ground, objects) in the lower image is way less controlled and permissive than a street.

The sizes and shapes of the surrounding objects are way less well known and predictable in the lower image compared to the image above.

The amount and variety of inputs, processing and decision-making required for a UGV to operate fully (Level 4) autonomously in the scenario shown in the second image are extremely high.

To add insult to injury, UGVs like the ones in the second image are also at a dramatic systemic disadvantage: they have to be cheap, rugged and expendable.

But isn’t Tesla using vision only for its Full Self-Driving tech? Sorry, Elon, but we need to take a brief tour through the vision (Elon) vs. Lidar debate, and explain why using vision alone to navigate even permissive ground environments with markers, like public streets, has proven to be a dead end so far. And settle it, once and for all. Buckle up, because this will be a bit of a (well-informed) rant.

Technologies and Techniques

Vision alone is a poor basis for autonomous driving due to challenges like poor weather performance, difficulty with 3D perception, and an inability to recognize novel or complex scenarios. Cameras are certainly needed for the operator to see what the UGV is seeing. But let’s go through the details of why training machine-learning models to perform even the simplest navigation tasks, let alone more complex ones or targeting, fails when using vision only in ground environments:

Visibility: fog, rain, snow, foliage, insects, dust and mud are not a camera’s friend. Nor a machine-learning model’s. They are, however, constant company in ground-based navigation, and this gets worse the closer you get to the ground. Not good for UGVs.

Lighting: Electro-optical (EO) sensors react miserably to glare from the sun (or deliberate dazzling). Telling objects apart in low-light conditions is hard enough for our ultra-high-resolution eyes and supercomputer-capable brains. Try a low-resolution sensor and shaky optics.

2D vs. 3D: Inferring depth is hard. It gets harder the more detail you need to process. Want to auto-detect a small bit of an anti-personnel mine (APM) sticking through the foliage? You’d better bring an NVIDIA H100 and a well-trained model based on unthinkable amounts of data. Before you’ve trained that, you might have blown up an entire fleet of UGVs. Plus, inferring depth is a process which, compared to Lidar depth measurement, is and always will be inherently error-prone. Trust me, I’ve tried it myself. The number of false positives in this process is haunting. Logically, the same is true for distance, and for determining the difference between an image of an object and an object.

Blind spots: This challenge can be overcome, but that makes the setup more costly again.

Fata morganas: Want to trick an EO- or IR-based algorithm? Partially cover or obscure the object you are trying to protect (like yourself). Not working? Cover more of the object. It is literally as easy as that. Want to distract the algorithm? Print a decoy. No need for fancy 3D decoys, either, because the algorithm will infer depth on the 2D decoy you have printed.

You could, of course, massively increase the amount of labeled data and compute you throw at the problem, which is exactly what Tesla is doing. But this starts to invert your cost-performance curve at some point, and the results in the Tesla vs. Waymo race speak for themselves.

Bats, man: a bat sees the world through echolocation. GIF: Science News

To summarize: ground-based autonomy based purely on vision is going to stay a dead end for a long time, due to both the inherent limitations of EO/IR input and the sheer complexity of reality. The closer to the ground, the worse it gets. The more novel/complex and changing the environment, the worse it gets.

Does that mean vision is completely out of the picture for machine learning for ground-based autonomy? Of course not. It just means that vision alone won’t cut it.

Take Lidar as a counterexample: it creates high-resolution, detailed 3D maps of its environment. As it turns out, the shape of the world can be a lot more helpful when navigating it than its colors, contrasts and brightness (ask bats, which use echolocation, a kind of high-frequency sonar, to see the world). Lidar doesn’t care about the lighting of a scene, or whether it’s foggy or not. It doesn’t need to guess the distance of an object, because it measures it. The same is true for the object’s speed and direction - not irrelevant in a battlefield scenario. This data can be used to infer potential collisions (or hits), for example.
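To make the collision point a bit more tangible, here is a minimal sketch. It assumes the sensor reports the range to the same object in two successive scans; closing speed and time to collision then follow from simple arithmetic. The function names and sample values are hypothetical and not taken from any specific Lidar product, and a real system would first have to track the object across scans.

```python
from typing import Optional


def closing_speed_m_s(range_prev_m: float, range_now_m: float, dt_s: float) -> float:
    """Closing speed between two scans; positive means the object is approaching."""
    return (range_prev_m - range_now_m) / dt_s


def time_to_collision_s(range_now_m: float, closing_m_s: float) -> Optional[float]:
    """Seconds until the range reaches zero, or None if the object is not approaching."""
    if closing_m_s <= 0:
        return None
    return range_now_m / closing_m_s


# Hypothetical example: an object measured at 30 m, then at 28 m one scan (0.1 s) later.
speed = closing_speed_m_s(30.0, 28.0, 0.1)
ttc = time_to_collision_s(28.0, speed)
print(f"closing speed: {speed:.1f} m/s, time to collision: {ttc:.2f} s")
```

The point of the sketch is that nothing here has to be inferred from pixels: both inputs are direct measurements.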

Lidar, which stands for light detection and ranging, emits pulses of laser light into the environment and measures the time it takes for them to reflect off objects and return to the sensor (a principle called time of flight, or ToF). This allows it to create three-dimensional point clouds (also called dot maps) of its environment and the objects contained in it. Lidar (also written LiDAR) has come a long way in the past decade, not just in terms of compactness and affordability (partially owed to semiconductor lasers or laser diodes, like the ones in your iPhone’s Lidar sensor, which is made by Sony), but also in terms of on-chip algorithmic classification functionality (Lidars are often sold as systems-on-a-chip, or SoCs), like semantic segmentation (of objects), object recognition (human, car...) and object tracking. They also support Lidar-SLAM (simultaneous localization and mapping; see also Part One), which allows for improved positioning data not just outdoors, but also indoors.
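The time-of-flight principle fits in a few lines. The sketch below is an illustration under simple assumptions (a single return per pulse, beam angles known, speed of light in vacuum); the function names and the sample returns are made up for this example.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_range_m(round_trip_s: float) -> float:
    """Range from round-trip time; halved because the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_rad: float, elevation_rad: float) -> tuple:
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# Hypothetical returns: (round-trip time in seconds, azimuth in degrees, elevation in degrees)
returns = [(66.7e-9, 0.0, 0.0), (133.4e-9, 90.0, 0.0), (200.0e-9, 45.0, -10.0)]

point_cloud = [
    to_point(tof_range_m(t), math.radians(az), math.radians(el))
    for t, az, el in returns
]

for x, y, z in point_cloud:
    print(f"x={x:7.2f} m  y={y:7.2f} m  z={z:7.2f} m")
```

Note the time scales involved: a target roughly 10 m away returns the pulse in under 70 nanoseconds, which is why timing precision drives range precision.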

So, to conclude, while purely vision-based systems can work for the sky and maybe for highly permissive environments (visual odometry is used in navigation and autonomous target acquisition systems for simpler/cheaper drones like interceptors - and in Tesla’s “Full Self-Driving”), more sensors are needed for reliable positioning, mapping, navigation and movement - especially in more chaotic environments.

Lidar-camera fusion allows for more detailed maps of the environment. Animation: Google

Vision is not dead, of course. Operators will continue to want to see what their (self-driving) UGV is seeing. They will want to continue to confirm successful strikes/deliveries/operations. And vision can be very useful when combined with other sensors. As a matter of fact, Lidar-camera sensor fusion is a common method to construct a more detailed three-dimensional scene. So common that there are tons of freely available models on GitHub.
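At its simplest, Lidar-camera fusion means projecting each 3D point into the camera image and attaching the pixel found there. The sketch below uses the standard pinhole camera model and assumes the points have already been transformed into the camera frame (in practice this requires an extrinsic calibration between the two sensors). The function names, the tiny 4x4 "image" and the intrinsics are hypothetical values chosen for illustration.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point (camera frame: x right, y down, z forward) to pixel coordinates.

    Returns None for points behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)


def colorize(points_cam, image, fx, fy, cx, cy):
    """Attach the pixel value to every Lidar point that lands inside the image."""
    height, width = len(image), len(image[0])
    fused = []
    for point in points_cam:
        uv = project_point(point, fx, fy, cx, cy)
        if uv is None:
            continue
        col, row = int(round(uv[0])), int(round(uv[1]))
        if 0 <= row < height and 0 <= col < width:
            fused.append((point, image[row][col]))
    return fused


# Hypothetical 4x4 image whose pixel values encode their own (row, col) position.
image = [[(row, col, 0) for col in range(4)] for row in range(4)]
points = [(0.0, 0.0, 5.0), (1.0, 0.0, 1.0), (0.0, 0.0, -1.0)]

fused = colorize(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
print(fused)
```

Only the first point survives here: the second projects outside the image and the third lies behind the camera. The result is a point that carries both measured geometry and appearance, which is the raw material for the more detailed scene described above.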

Then, there’s RGB-D. RGB-D cameras add a depth channel to the red, green and blue channels of regular EO sensors. This is achieved either with stereo vision (two cameras) or with ToF measurement of pulsed light, usually infrared (IR). While it sounds like the perfect solution at first glance, it comes with its trade-offs. Because it uses visible light and IR instead of high-powered focused lasers, it is as susceptible to lighting conditions as other cameras. While it can provide higher-resolution data at short distances (all of this depends on the sensor, algorithm and processing, of course), its accuracy falls off sharply with distance in comparison to the laser-based Lidar. It also produces more noise, given the physical parameters of EO sensors, and is sensitive to glare, which is why it is generally used more for indoor applications, like room mapping and positioning (using odometry), for instance for augmented reality applications.
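For the stereo variant, the drop in accuracy with distance can be shown with textbook triangulation: depth is focal length times baseline divided by disparity, so a fixed disparity error translates into a depth error that grows with the square of the distance. The sketch below is a first-order approximation; the focal length, baseline and matching error are assumed values, not the specifications of any real camera, and ToF-based RGB-D sensors degrade for different reasons (mainly weaker return signals).

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from stereo triangulation: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Assumed camera: 600 px focal length, 10 cm baseline, quarter-pixel matching error.
FOCAL_PX, BASELINE_M, DISPARITY_ERROR_PX = 600.0, 0.10, 0.25

for depth in (2.0, 5.0, 10.0, 20.0):
    error = depth_error_m(FOCAL_PX, BASELINE_M, depth, DISPARITY_ERROR_PX)
    print(f"depth {depth:5.1f} m -> expected error of about {error:.3f} m")
```

Under these assumptions, ten times the distance means a hundred times the depth error, while a ToF Lidar's range error is set mostly by its timing precision.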

The fusion of both technologies, RGB-D and Lidar, used in conjunction with inertial measurement units (IMUs, which combine accelerometers and gyroscopes), global navigation satellite systems (GNSS; e.g. GPS), odometry (estimating motion to determine the distance traveled between two frames or snapshots of the environment) and SLAM, is where robotic ground autonomy gets a bit more exciting. Add radar to the mix (radar works similarly to the aforementioned echolocation used by bats, by measuring the time it takes for radio waves to return to the sensor/emitter and by measuring the Doppler effect, or frequency shift), and things get even more exciting. There are two important caveats, though:
